Could AI have written a better novel?

I lead data science at Klaviyo. Among other things, our group is building tools to help users write copy. I’m also the author of the science fiction novel The Insecure Mind of Sergei Kraev, which was published yesterday. So as I was writing it, I couldn’t help but wonder: could GPT-3, the language generation AI, have done a better job? Let’s dive right in.

The first few lines of my prologue are:

April 14, 2220

One hundred years after 4-17

Singapore Island

Children,

This is my ninety-fourth annual message.

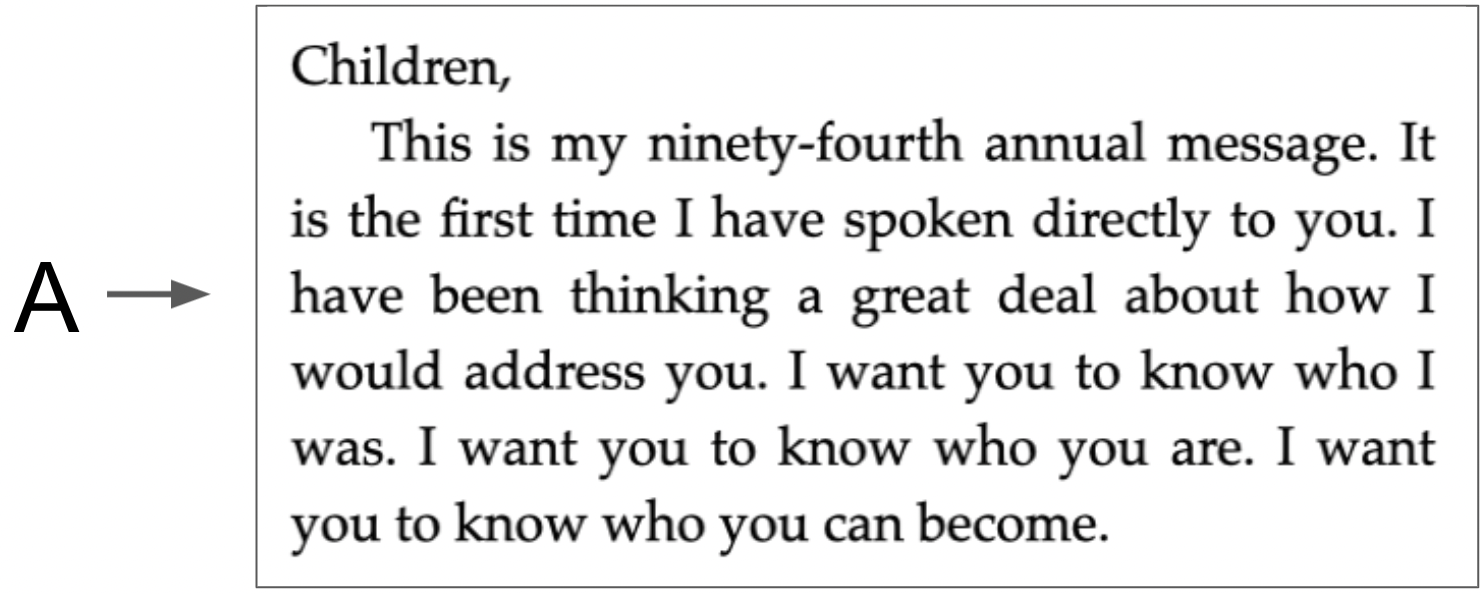

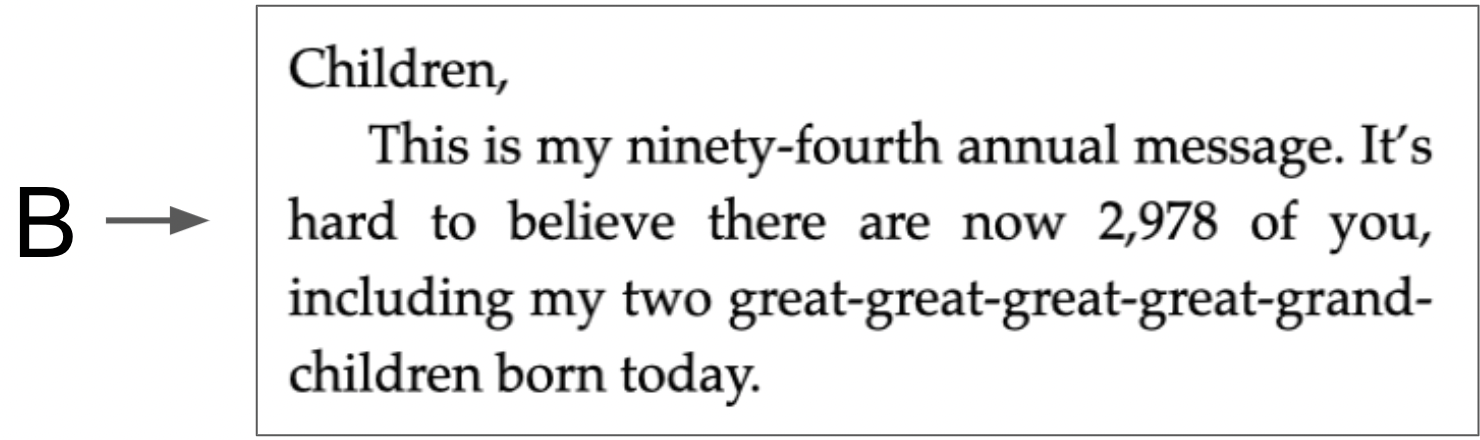

Which of the following opening paragraphs grabs you most? One is the actual text from my novel, written by me, a human. The other three were generated by GPT-3.

It’s incredible. All four appear to have been written by a human. Even crazier, and more impressive than the correct grammar, all four make me want to know what comes next. This isn’t just an AI stringing together words that your mind registers as correct English but that turn out to be nonsensical when you read closely. Concepts are being conveyed. An idea is planted, and I, for one, want to know what happens. In C), for example, why is “Singapore Island” under siege, and why are we in the “long twilight of our species”?

To my eyes, no paragraph is the clear winner. Paragraph B) is the one from my novel, the only one written “by hand” by a human being. You could argue that it’s the least intriguing of the four, which would mean that, at least for the opening paragraph, GPT-3 beats me at writing my own novel.

(You may be wondering whether there was any human editing or selection above. Did I generate a hundred examples and pick the best three? No. The only thing I did was give GPT-3 the first few lines of my prologue shown above as a prompt. I then generated text three times. Paragraphs A), C) and D) are the first paragraphs of the generated text from each of the three runs. I did not tweak the prompt, adjust settings, or discard bad output.)

Of course, a novel needs more than an opening paragraph. Mine is a whole story about a humble engineer who dreams of becoming a professor but gets mixed up with the charismatic and beautiful leader of a K-Pop cult. Everything fits together, and events in the early chapters build toward scenes in later ones. Can an AI do that? Can it maintain a plot over hundreds of pages?

Well, how about over a few pages? Here are the next few paragraphs that were generated for run A). I didn’t touch the text or remove/add paragraph breaks.

There’s so much to say about this. It gets a bit tedious toward the end for a prologue, but the whole thing still hangs together. There are thought-provoking ideas like: “I was born into an age of miracles, but I became President in an age of wonders.” The closing paragraphs read almost like a religious text, perhaps with some kind of call and response between leader and congregation. And the pairs all work: destiny to possibility, limits to infinity, humanity to divinity, darkness to light... I want to live in that world. The AI also keeps the arithmetic consistent: the letter writer was born in 2029 and is forty-five years old when sworn in as president in 2074. I’m less sure about being a great-grandmother at forty-five, though. Possible, but just barely!

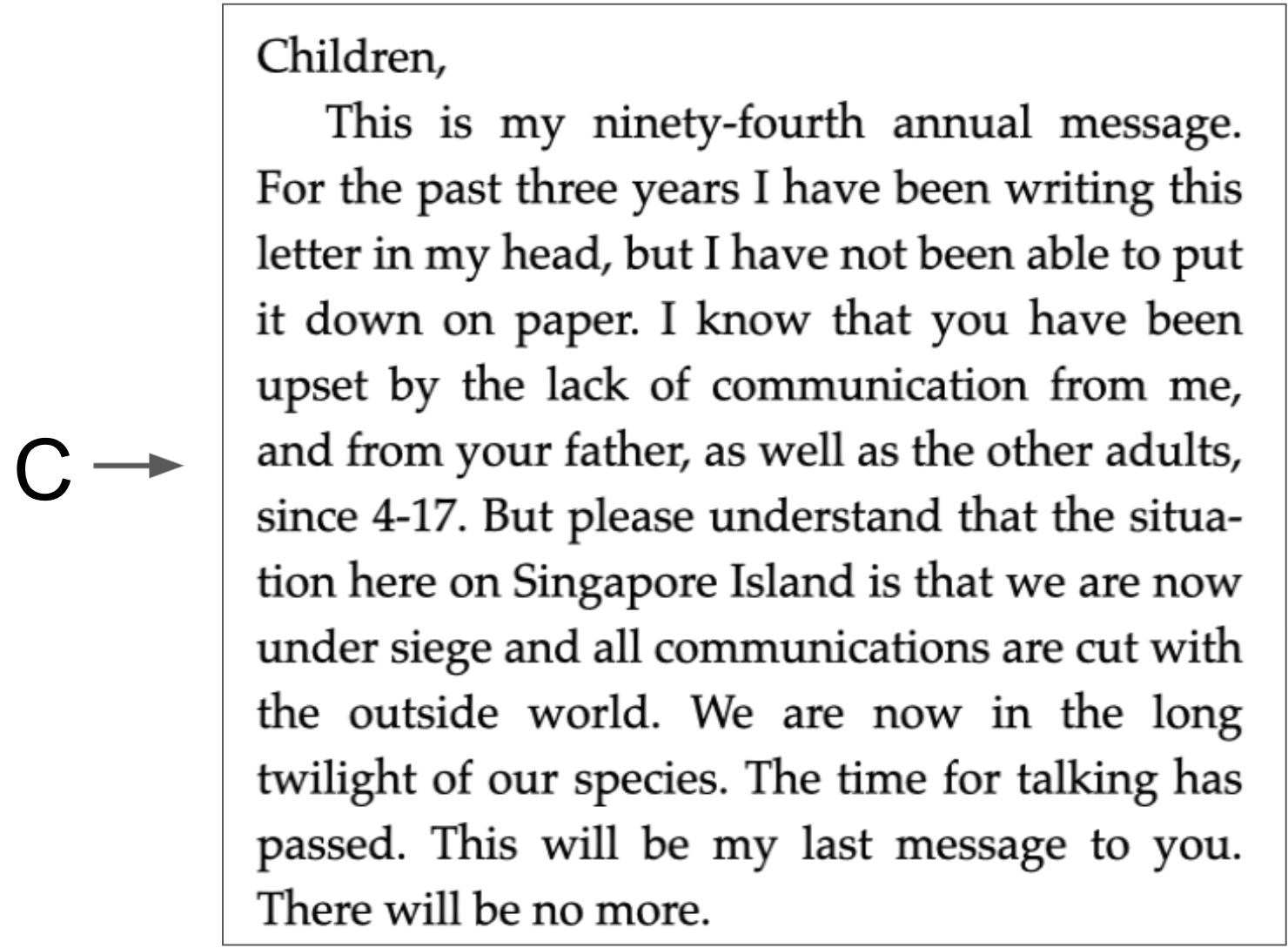

Let’s look at C), which from the first paragraph sets up a much more specific scenario.

It hangs together. It holds my interest. It carries forward the idea that something important happened on 4-17 (a key element of my actual text that it picked up only from the prompt). The AI “decided” that the author and recipient last met in Fukuoka, a real city in Japan. The first point where I even question the logic is some confusion about who is being rescued: is it the author and the people in Singapore, or the recipient of the letter? The line “I am not writing this letter on this typewriter” also seems odd, but again, maybe it’s all part of the mystery.

Here’s all the text generated for D).

This all sort of makes sense, and it raises so many delicious questions. Why was the island abandoned? What happened to the Mars mission? What happened to everyone on Earth? And what is this waterbear thing? According to Wikipedia, “Tardigrades, known colloquially as water bears...are among the most resilient animals known, with individual species able to survive extreme conditions—such as exposure to extreme temperatures, extreme pressures (both high and low), air deprivation, radiation, dehydration, and starvation—that would quickly kill most other known forms of life.” So that, too, makes some kind of sense, at least as much sense as a science fiction story requires.

Okay, so the AI is writing, but is it thinking? It's tempting to say no: the AI is just copying patterns, picking the most likely next token, then the token after that, and so on, with just enough randomness that each run generates something different. But how different is that from what we humans do when we think?
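The "most likely next token, plus a little randomness" process can be illustrated with a toy sketch in Python. This is a simplified illustration under stated assumptions, not how GPT-3 is actually implemented: the four-word vocabulary and its scores (logits) are made up, and `softmax` and `sample_next_token` are hypothetical helper names. A temperature of 0 always picks the top-scoring word; a higher temperature lets less likely words through, so repeated runs diverge.

```python
import math
import random

def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; a lower temperature sharpens the distribution."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_next_token(vocab, logits, temperature, rng):
    """Pick one token: the argmax when temperature is 0, otherwise a weighted random draw."""
    if temperature == 0:
        return vocab[max(range(len(vocab)), key=lambda i: logits[i])]
    probs = softmax(logits, temperature)
    return rng.choices(vocab, weights=probs, k=1)[0]

# Hypothetical vocabulary and made-up scores for the word after "I need to go to the"
vocab = ["bathroom", "store", "supermarket", "moon"]
logits = [3.0, 1.5, 1.0, -2.0]

rng = random.Random(0)
greedy = [sample_next_token(vocab, logits, 0, rng) for _ in range(5)]
varied = [sample_next_token(vocab, logits, 1.0, rng) for _ in range(5)]
print(greedy)
print(varied)
```

At temperature 0 every run ends the sentence the same way; at 1.0 the lower-scoring words occasionally appear, which is one simple way the same prompt can yield different continuations.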

We humans have a hard time thinking about anything complex without forming sentences. And as soon as you start forming sentences, you're mostly copying patterns. You almost can’t help but maintain grammatical consistency with what your brain knows about language in general and logical consistency with the words and sentences you’ve just formed as part of “verbalizing” your thought.

But thinking is more than that. There is a deliberate component. Perhaps you have some core, inner, not-yet-verbalized thought, and it directs the sentence you create. Take a common but not-so-interesting thought. When you think “I need to go to the bathroom,” it’s not just that something compels you to start thinking “I need to go to” and then your mind flips a coin and selects “bathroom” as a high-probability way to end that sentence. It’s that you have an inner biological urge, and it guides you to utter that sentence and to end it with “bathroom” and not “supermarket.”

Is the AI doing that? It has probabilities and randomness (as do we), but are the sentences it writes conveying some pure inner thought? Is that the difference between human thought and GPT-3? I hope so.

Now, back to writing a novel. Can the AI bring the story home? Can it bring all the threads to a logical and satisfying conclusion? Could it do that across dozens of chapters and tens of thousands of words? Could it even do that for a short story of a few pages? GPT-3 only lets you generate a limited amount of text, and the examples above are close to the maximum. However, I suspect that even if I could generate ten pages, the stories started above would neither resolve nor hold a reader’s interest.

And yet that may not be far off. An AI is already able to carry concepts forward through a few paragraphs of text. Could it keep an even broader structure “in mind,” a “macro level attention model,” and then fill in a whole book? Could I supply a beginning and an ending and have it find words to fill in the middle that are both interesting and logical? That doesn’t seem crazy. (And perhaps it’s arrogant of me as the human to think I should get to define the ending. Maybe the AI will do a better job of that too.)

One of the ideas in my novel (the actual text I wrote) is that humans have developed technology (AI Fact Checking) and regulation (Board of Reality Overseers) to squash conspiracy theories and stop fake information from spreading. Seeing how good AI text generation is getting makes that seem even more important. We tend to believe what we read, and unethical human actors take advantage of that by flooding social media with stuff they make up. What happens when AI lets these bad actors generate an unlimited volume of text? If 99.9999+% of text on the Internet is fake, we’ll need systems to tell us which needles in the haystack we can trust.

Circling back to the question posed in my title: could GPT-3 have written a better novel? For the prologue, my answer is yes, or at least it’s a tie. But for the whole novel, no. By the time you get a few pages in, my human-written novel wins.

If you’re intrigued by ideas like AI Fact Checking, what the world might be like eighty years from now, or how a humble engineer gets mixed up in a K-Pop cult, read (or listen to) The Insecure Mind of Sergei Kraev. If you like it, great! And if you don’t, perhaps you’ll tell me I should have let GPT-3 be the author. (And I'll go off, relax on the beach, enjoy my UBI, and read some AI-authored novels... until the AI tells me to start pedaling a stationary bike to generate electricity for it.)